A sensor is a solid-state device which captures the light required to form a digital image.

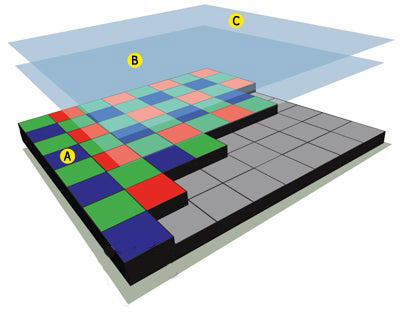

Digital sensors are complex, three-dimensional structures. The typical image sensors used in most digital cameras are made up of many individual photosensors, each of which captures light. These photosensors can measure the intensity of light but not its wavelength (colour). As a result, image sensors are typically overlaid with something called a "colour filter array" or "colour filter mosaic". The most common type is the Bayer filter array.

The Bayer filter was invented in 1974 by Bryce Bayer, an employee of Eastman Kodak. This overlay consists of many tiny filters, one over each pixel, which allow the pixels to record colour information. The arrangement uses two green filter elements (GG) for each red (R) and each blue (B) element, which is why Bayer's arrangement of microfilters is sometimes referred to as RGGB. The human retina is naturally more sensitive to green light in daytime vision, and Bayer used this knowledge when he chose filter proportions that favour green, in an attempt to mimic our visual perception. The pattern repeats over 2x2 blocks of pixels, and each microfilter covers exactly one pixel, that is, one quarter of the block.
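To make the 2x2 tiling concrete, here is a minimal Python sketch that maps a pixel's row and column to the filter colour above it. It assumes the mosaic starts with red in the top-left corner (a real sensor may start the pattern on a different phase), and the function names are hypothetical.

```python
# Minimal sketch: which colour filter sits over each pixel in an RGGB Bayer mosaic.
# Assumption: the pattern starts with red at row 0, column 0.
from collections import Counter


def bayer_colour(row: int, col: int) -> str:
    """Return 'R', 'G' or 'B' for the microfilter covering pixel (row, col)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def filter_counts(height: int, width: int) -> Counter:
    """Count how many pixels of a height x width sensor sit under each colour."""
    return Counter(bayer_colour(r, c) for r in range(height) for c in range(width))


if __name__ == "__main__":
    # Print one 2x2 tile of the repeating pattern.
    for r in range(2):
        print(" ".join(bayer_colour(r, c) for c in range(2)))
    counts = filter_counts(4, 6)
    # Half of the 24 pixels are green: 12 G, 6 R, 6 B.
    print(counts["G"], counts["R"], counts["B"])
```

Counting over any even-sized sensor gives the 2:1:1 green-to-red-to-blue ratio described above.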

Each pixel therefore records only one of the three primary colours, so no single pixel can output complete colour information on its own. Instead, the missing values are estimated from neighbouring pixels. In the Bayer layout, a pixel recording green is flanked by two pixels recording blue and two recording red. Together, these five pixels provide the information needed to estimate the full colour value at the green pixel. Similarly, the green pixel's reading contributes to estimates of the missing green values at the red and blue pixels surrounding it.
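Estimating the missing colours from neighbours is called demosaicing. The neighbour-averaging idea can be sketched as bilinear interpolation, one of the simplest demosaicing methods; in-camera processors and raw converters generally use more sophisticated algorithms, so treat this as an illustration rather than how any particular camera works. The sketch assumes the same RGGB layout (red at the top-left), only handles interior pixels, and `rgb_at` is a hypothetical helper.

```python
# Minimal bilinear demosaicing sketch for an RGGB mosaic (assumed: red at row 0, col 0).
# `raw` is a 2D list of single-channel readings, each taken through one colour filter.


def bayer_colour(row: int, col: int) -> str:
    """Colour of the microfilter over pixel (row, col)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mean(values):
    return sum(values) / len(values)


def rgb_at(raw, row, col):
    """Estimate (R, G, B) at an interior pixel by averaging nearby pixels of the missing colours."""
    up, down = raw[row - 1][col], raw[row + 1][col]
    left, right = raw[row][col - 1], raw[row][col + 1]
    diagonals = [raw[row - 1][col - 1], raw[row - 1][col + 1],
                 raw[row + 1][col - 1], raw[row + 1][col + 1]]
    own = raw[row][col]
    colour = bayer_colour(row, col)
    if colour == "G":
        horizontal, vertical = mean([left, right]), mean([up, down])
        # On a red row the left/right neighbours are red and up/down are blue;
        # on a blue row it is the other way round.
        if row % 2 == 0:
            return (horizontal, own, vertical)
        return (vertical, own, horizontal)
    green = mean([up, down, left, right])  # the four orthogonal neighbours are green
    other = mean(diagonals)                # the opposite colour sits on the diagonals
    if colour == "R":
        return (own, green, other)
    return (other, green, own)


if __name__ == "__main__":
    # A flat test scene (R=100, G=150, B=50) photographed through the mosaic.
    scene = {"R": 100, "G": 150, "B": 50}
    raw = [[scene[bayer_colour(r, c)] for c in range(6)] for r in range(6)]
    print(rgb_at(raw, 2, 3))  # a green pixel on a red row
```

For a flat scene every interior pixel recovers the original colour; on real images with edges and fine detail, simple averaging like this produces the colour fringing that better demosaicing algorithms try to avoid.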

But this is not the only filter on the sensor: as the diagram shows, there are more.

C – Infrared filter (hot mirror)

Camera sensors are sensitive to some infrared light. A hot mirror placed between the lens and the low-pass filter prevents this light from reaching the sensor, which helps minimise colour casts and other unwanted artefacts. When a camera is converted into a full-spectrum camera, this filter is removed.

The Problem with These Filters

Because of the colour filter array and the other filter layers, sensors have thick filter stacks, and these stacks cope poorly with light arriving at a very steep angle of incidence. The stack "smears" image detail on its way to the sensor: light that passes through a red filter may land on the neighbouring green pixel, the IR filter can smear details, and the pixel "well" itself may lose light that hits the side of the well and never reaches the bottom. Remember also that light of different wavelengths (colours) is refracted by different amounts, so each colour takes a slightly different path through a lens and through the filter stack.
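One way to see why steep angles hurt is the standard formula for the sideways offset of a ray crossing a flat, parallel-sided glass plate. The sketch below treats the whole filter stack as a single plate, which is a simplification, and the 2 mm thickness and the two refractive indices standing in for blue and red light are illustrative assumptions, not measurements of any real sensor. The offset is zero for light arriving head-on and grows with angle; because the rays in a cone of light arrive at different angles, and different colours see slightly different indices, the offsets differ from ray to ray, which spreads a point of light out.

```python
import math


def plate_shift_mm(thickness_mm: float, angle_deg: float, n: float) -> float:
    """Lateral offset of a ray crossing a flat, parallel-sided plate of refractive index n.

    Plane-parallel plate formula:
        d = t * sin(theta) * (1 - cos(theta) / sqrt(n**2 - sin(theta)**2))
    """
    theta = math.radians(angle_deg)
    s = math.sin(theta)
    return thickness_mm * s * (1 - math.cos(theta) / math.sqrt(n * n - s * s))


if __name__ == "__main__":
    # Hypothetical 2 mm stack modelled as one plate; indices are illustrative only.
    n_blue, n_red = 1.53, 1.51
    for angle in (0, 10, 20, 30, 40):
        red = plate_shift_mm(2.0, angle, n_red)
        blue = plate_shift_mm(2.0, angle, n_blue)
        print(f"{angle:2d} deg: shift {red * 1000:6.1f} um, blue-red spread {(blue - red) * 1000:5.2f} um")
```

Both the shift and the gap between colours grow quickly with angle, which is consistent with the edges and corners of the frame being where these problems show up first.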

All of this creates a problem when mounting older lenses (especially wide-angle lenses) on mirrorless bodies. With a non-retrofocal wide-angle lens, whose rear element sits close to the sensor, the light can be travelling at a fairly steep angle of incidence when it reaches the edges and (especially) the corners of the sensor.
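A rough way to put numbers on this is to pretend that light reaches the corner of the sensor from a single point on the optical axis, the lens's exit pupil, and compute the angle with basic trigonometry. Non-retrofocal wide-angles typically have their exit pupil close to the sensor, while retrofocal designs push it further away. The sketch assumes a full-frame 36 x 24 mm sensor, and the exit-pupil distances are hypothetical values chosen for illustration, not specifications of real lenses.

```python
import math

# Assumption: full-frame 36 x 24 mm sensor; centre-to-corner distance is half the diagonal.
FULL_FRAME_HALF_DIAGONAL_MM = math.hypot(36.0, 24.0) / 2  # about 21.6 mm


def corner_angle_deg(exit_pupil_distance_mm: float,
                     half_diagonal_mm: float = FULL_FRAME_HALF_DIAGONAL_MM) -> float:
    """Angle from the sensor normal of a ray travelling from an on-axis exit pupil to the corner."""
    return math.degrees(math.atan(half_diagonal_mm / exit_pupil_distance_mm))


if __name__ == "__main__":
    # Hypothetical exit-pupil distances, from very close to the sensor to fairly far away.
    for distance_mm in (20.0, 40.0, 80.0):
        angle = corner_angle_deg(distance_mm)
        print(f"exit pupil {distance_mm:.0f} mm away: about {angle:.0f} deg at the corner")
```

Halving the exit-pupil distance does not simply double the angle, but a pupil only a couple of centimetres from the sensor already puts the corner rays well past 45 degrees.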

A wider mount throat makes room for a lens design that doesn't need to project light at such a steep angle to cover the corners of the sensor. When light arrives at a steep angle, you can typically expect more vignetting, and in extreme cases a rather strange rainbow-like colour shift toward the corners.
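For the vignetting part, a common idealized model is the cos⁴ law, which says that illumination falls off with the fourth power of the cosine of the angle at which light arrives. The sketch below converts that falloff into stops. It is a simplification: it ignores the extra losses from the filter stack and pixel wells described earlier, so real falloff at steep angles can be worse, and the function names are hypothetical.

```python
import math


def cos4_relative_illumination(angle_deg: float) -> float:
    """Idealized cos^4 law: illumination relative to the centre of the frame (1.0 on axis)."""
    return math.cos(math.radians(angle_deg)) ** 4


def falloff_stops(angle_deg: float) -> float:
    """The same falloff expressed as a loss in photographic stops (each stop halves the light)."""
    return math.log2(1.0 / cos4_relative_illumination(angle_deg))


if __name__ == "__main__":
    for angle in (0, 15, 30, 45):
        print(f"{angle:2d} deg: {cos4_relative_illumination(angle):.2f} of centre, "
              f"{falloff_stops(angle):.1f} stops darker")
```

At 45 degrees, for instance, the cos⁴ law leaves a quarter of the on-axis light, a loss of 2 stops, before any sensor-specific losses are counted.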